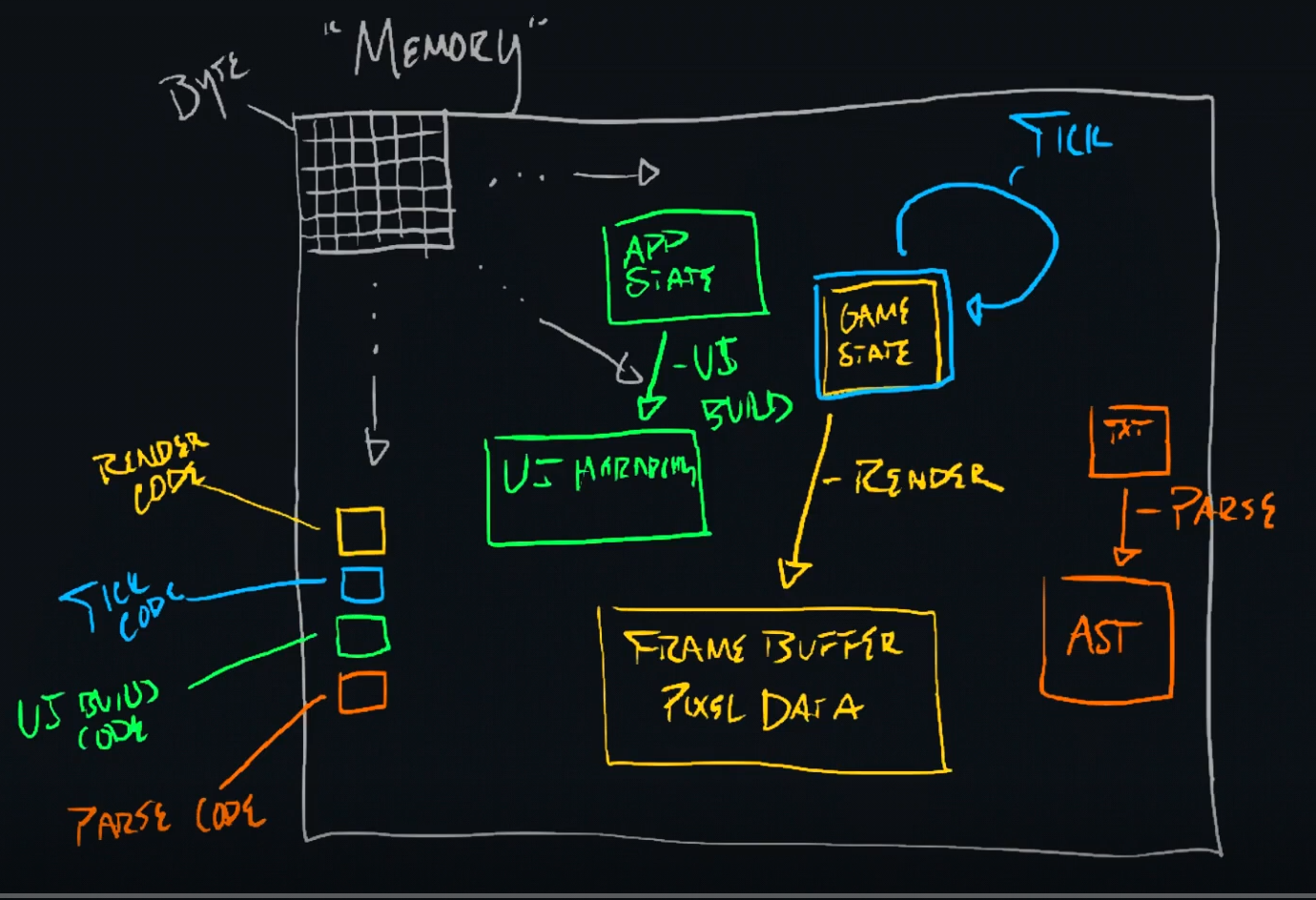

Manual Memory management

On Computer systems, memory is managed in two different ways

- The OS has to manage physical memory and provide mechansims and policies for sharing that memory between processes.

- Processes need to managae their own Virtual Address space provided for them by the OS. Some languages provide garbage collection to manages memory but regardless memory is being managed. In C/C++ the programmer manages the memory manually.

All computer programs do is transform data from A to B. The Code is in memory, data object A is in memory, and the results of computation data object B is stored somewhere in memory as well.

virtual memory

What is a virtual address space?

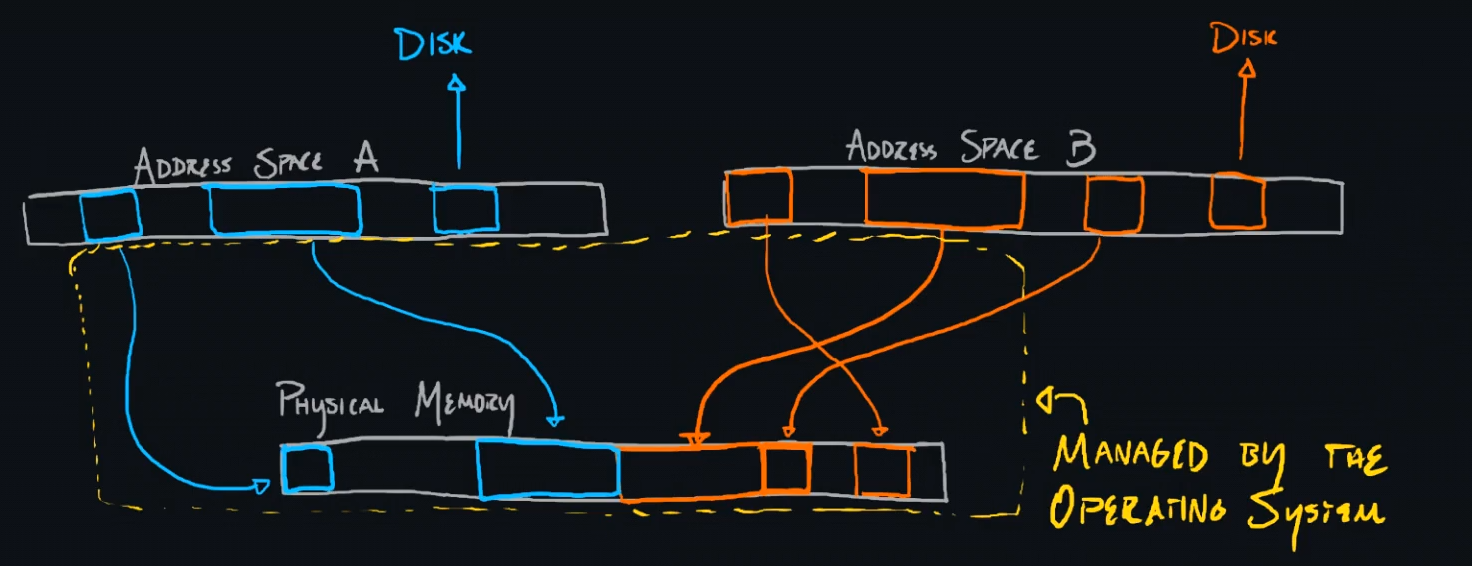

Proceses cannot directly address actual physical memory using physical memory addresses.. Processes have their own virtual address space. The cpu and OS translate virtual addresses to physical addressed on behalf of each process.

This allows the OS a mechanism for sharing memory between processes.

Using this model the OS is able to provide a large, effecient and private memory space for each process running on a system.

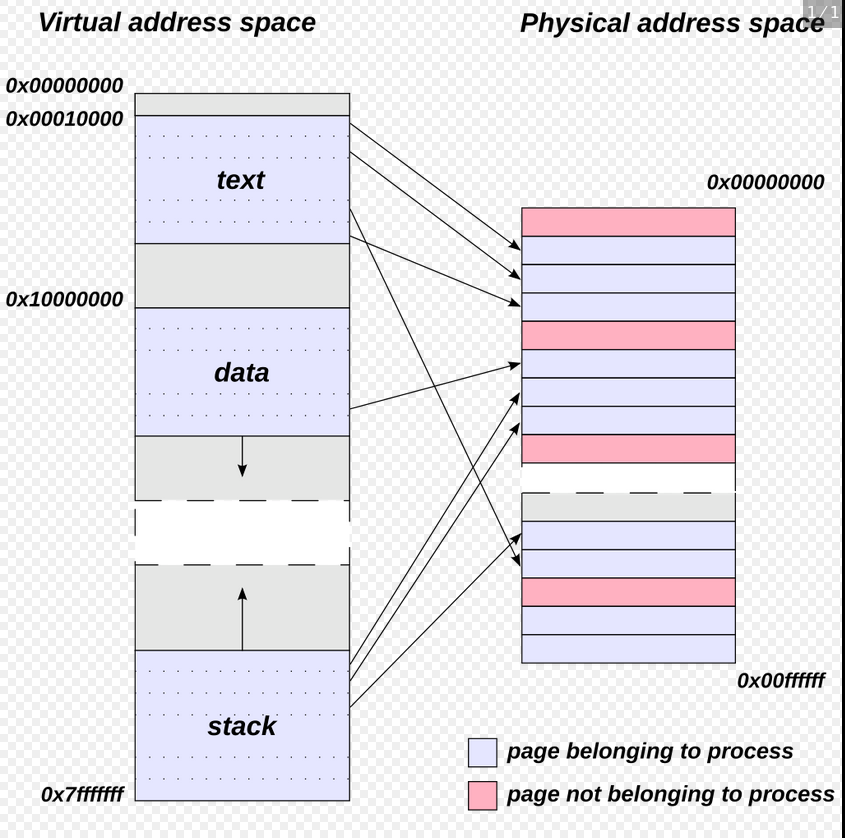

PAGING

ON The OS mgmt side, the os divides the full set of addresses into 4kb pages. When a process starts the OS allocates memory for each of it's sections. During runtime a process may also requests memory using syscalls, the OS maps memory by the page when the OS manages memory. So each of the processes segments are alocated n number of pages. They each start and finish on some page boundary.

On the surface Virtal memory systems give the appearance that the programmer has unlimited memory to play with. But this is not really the case. If it was we wouldn't need any memory management at all.

When writing code for some hardware like phones, wearables, and game console, memory limitiations have to be taken into even more consideration.

Anytime you request memory you introduce a failure point into your program. Memory is a limited resource, When you request memory from the OS it can fail. Modern OS, make it seem like we have infinite memory but once a program starts thrashing, (overusing harddrive swapspace) it's performance will suffer greatly.

PROCESS MEMORY

Where does process memory come from?

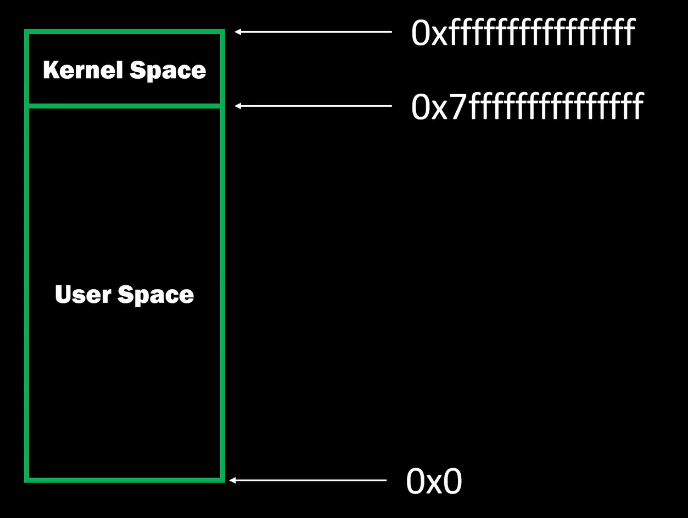

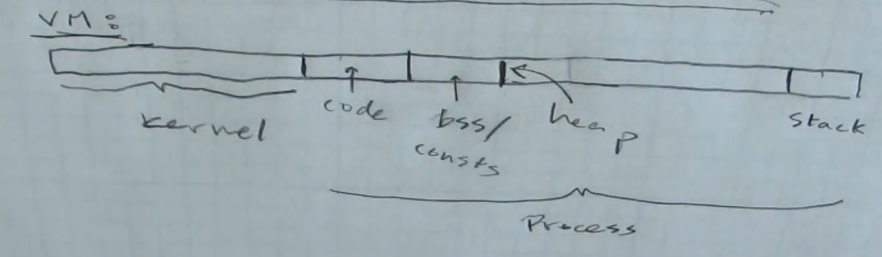

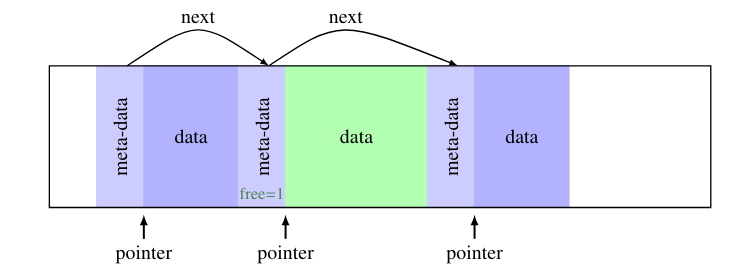

The address space of a process is called it's Virtual address space. A processes address space is divided into two sections, kernel space and user space

Process addresses do not directly reference bytes of physical memory but are translated into actual physical addresses by the CPU and OS

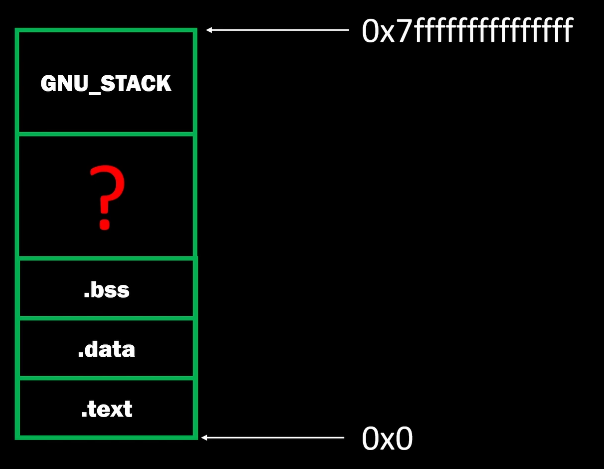

The User space portion of a processes virtual address space is further divided into subsections. Each subsection is aligned by page boundaries usually 4kb

- .text - actual cpu instructions, the $rip register usually is pointing to this area

- .data - constants and initialized static global variables.

- .bss - uninitialized global and static variables

- .the - stack starts just below kernel space and works it way down. As we have studied in previous classes. Stack Memory is grows and shrinks as the function call stacks gets deeper and shallower

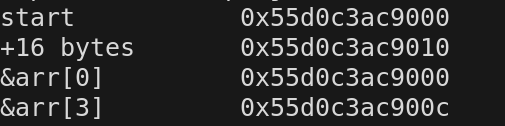

- Just above the .bss section are many available unmapped addresses. This area give the programmer flexiblity to for to manage additional memory that may be required at runtime.

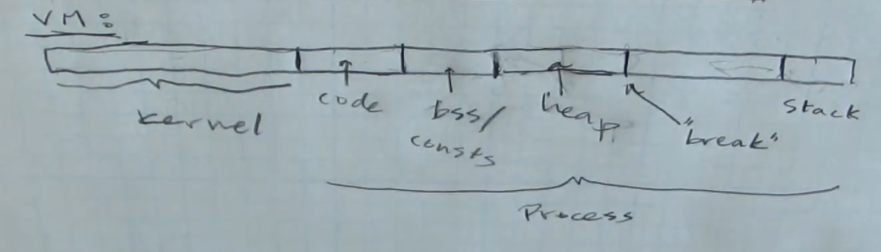

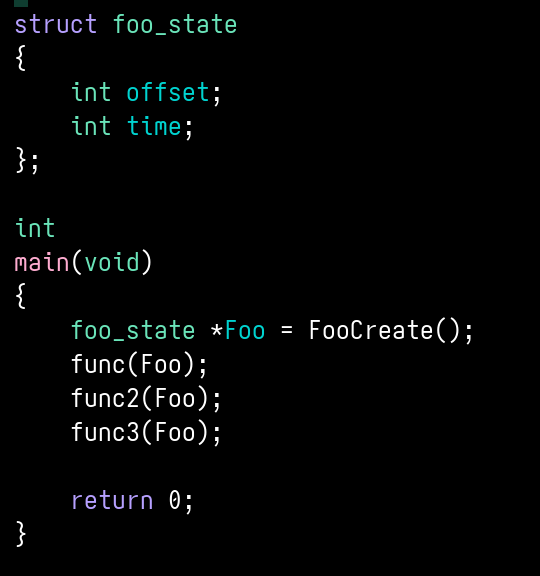

The heap is the set of addresses between the BREAK and the top boundary of .bss. As the break moves towarsds the stack, the heap grows.

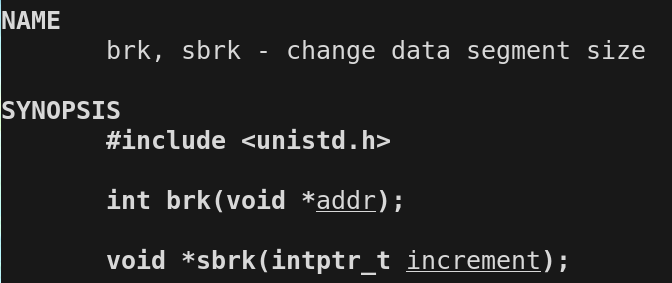

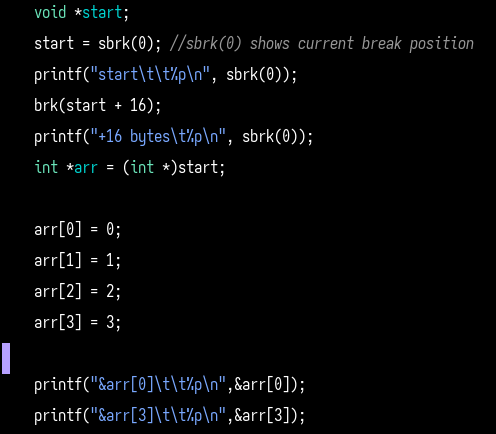

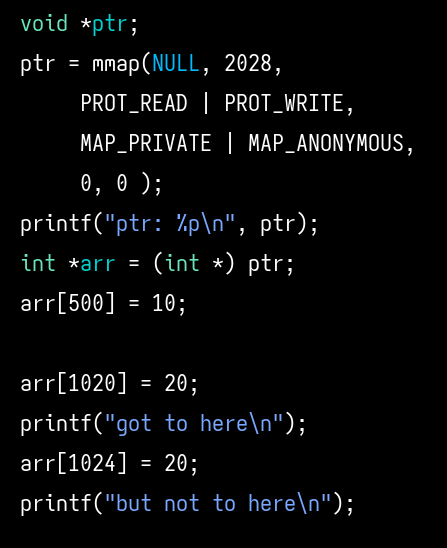

To move the break and create space for the heap, linux provides a pair of system calls called sbrk() and brk()

As you can see from the man page screen shot. sbrk() takes an offset(-,+). brk() takes an actual target address

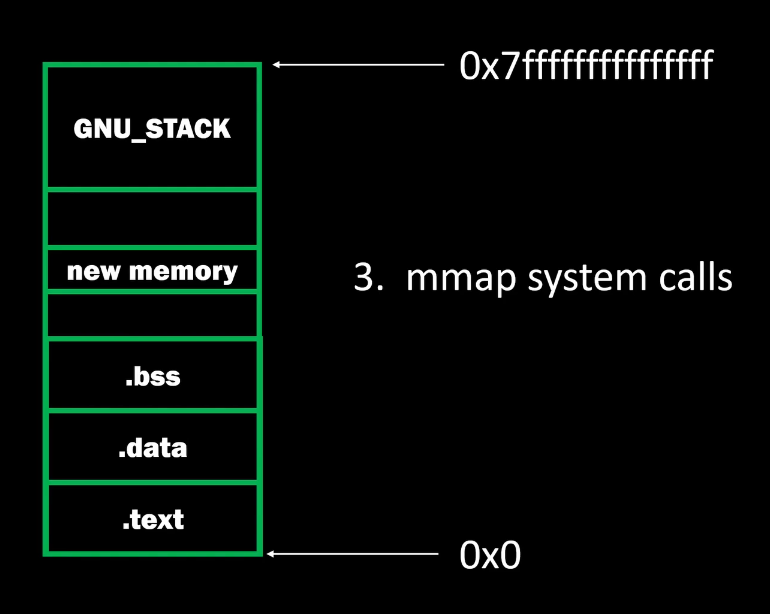

The mmap systems asks the os to give a process memory in the free mem area.it does not rely on the BREAK

mmap() maps full pages of memory, on page boundaries, wether the programmer requests them or not.

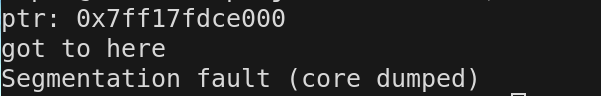

In the previous code you will notice that although we only requested 2048 bytes we were able to write an int to the 4092 byte. That is within one page of memory from the start of the arr.

We can't write to arr[1024] because we are already trying to write outside the allocated page, thus we get a segmentation fault

You can use munmap() to unmap any OS allocated pages.

Syscalls vs function calls

There are certain operations that only the kernel(The OS code) is allowed to perform.

When a user process makes a system call, the cpu automatically changes itself into kernel mode and jumps into actual kernel code to execute some kernel routine that provide some service back to the user process. When it is done it returns back to the user process code.

A function call is a user routine. A syscall is a kernel routine.

You can use the command strace to observe all the syscalls made by your process.

You can see the mmap calls in the following strace setting up each of the segments for the process. These calls are made by the c runtime

Internal Allocators and Allocation Strategies

The builtin stack

You may ask, why can't we just rely on the stack? all allocation and dealloaction happens automatically on the stack. This takes a conginitive load off of the programmer. The lifetimes of the memory on the stack are dictated by the scope of each function call. Memory is allocated and deallocated as we enter and exit functions.

The type of allocation done by the builtin stack is called STATIC allocation. With static allocation the SIZES of all objects are specified at compile time.

Sometimes We need runtime allocation called dynamic allocation. In static allocation, sizes can't be changed at runtime. Memory lifetimes are tied to function calls which limit the programmer to certain code paths that may not be otpimal.

For programmers to manage the memory within a processes virtual address space effectively, they need to understand the programs memory requirements. They can develop allocation strategies that prevent, memory leaks and dangling pointers. They can also layout memory in a way that optimizes cache usage which makes a programs run much faster.

The two memory traits to keep in mind when developing memory management strategies are the spatial and temporal aspects of memory. A programmer can design an allocation strategy based on the answers to the following questions

- How much memory is going to be needed for each object, or groups of objects? how much in total?

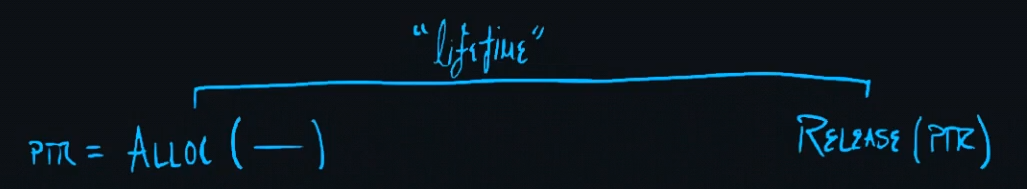

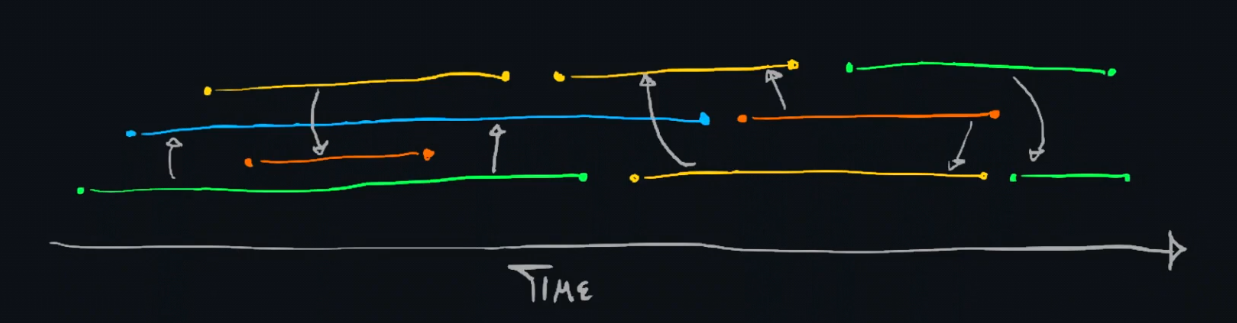

- What is the lifetime of any allocated memory?

A lifetime is defined as, how long some memory is going to have to stay allocated for any given object or groups of objects. The timespan from allocaion to release is the lifetime.

The generic allocator interface (runtime allocation)

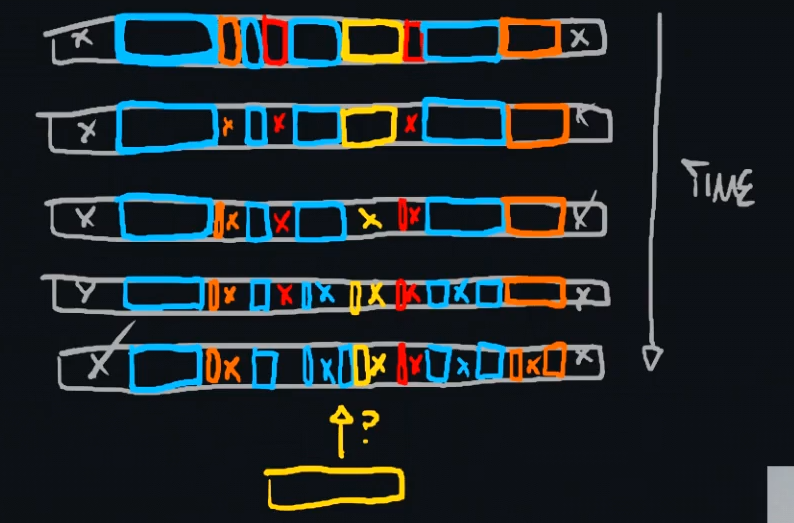

the generic general purpose allocator asks the operating system for a large block of memory, using sbrk() or mmap() syscalls. The GPA mantains a datastructure to keep track of which part of the block has been used and which is free.

When the program asks for memory, the GPA SEARCHES for a free chunk and passes the pointer back to the program. It then marks it as used.

When the program calls free, the GPA updates the datastructure to mark that the chunk is now available.

Notice that everytime the programmer requests memory, a search takes place. This can be costly.

There is a tendency towards fragmentation whenever a single block of memory is divided into chunk of varying sizes.

Malloc and Free

On linux systems glibc, provides an General Purpose allocator for us.

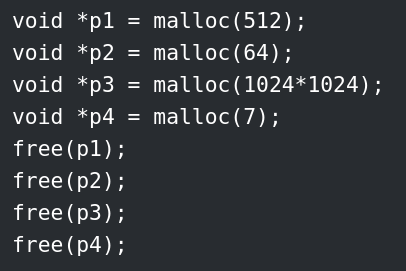

In c The glibc allocator we use to allocate memory is called malloc, we call malloc() to allocate memory and free() to deallocate memory. In c++ we use the "new" and "delete" keywords to do the same. malloc() and free() are function calls provided by glibc,the c std lib, they are not syscalls.

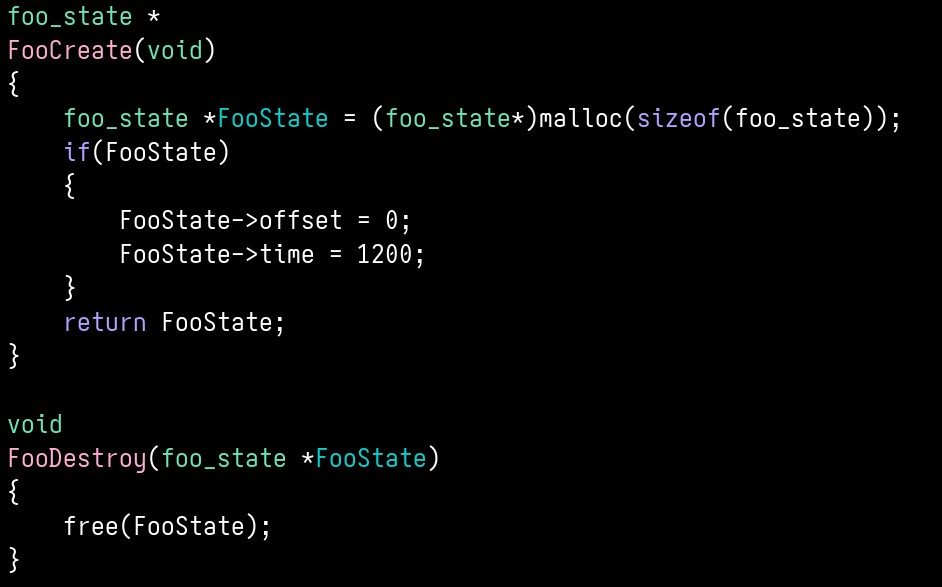

The typical usage rule is for every malloc() you call, you must call a free(), at some later time. Often Programmers use the General purpose allocator in away that malloc's and free's whenever a new object needs to be created using functions (constructors) for allocating memory and initiazling and other functions (destructors) for dealocating memory

You can create an object of type foo and pass around a pointer to it, whenever you need.

OUR VERY OWN Generic Allocator

Before we go on to discuss the drawback of this usage pattern, Let's buid our own version of malloc.

Over the next several slides we will develop a VERY simplified version of a Generic Allocator

A couple of definitions to keep in mind

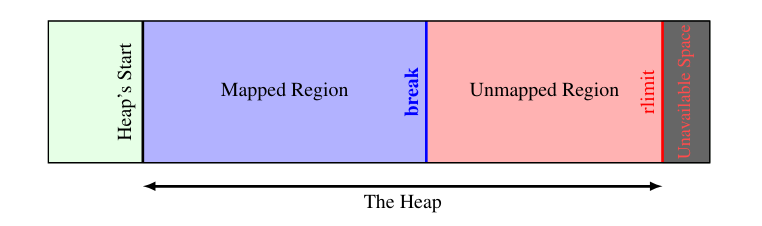

- Heap - A contiguous region of memory that can be subdivided into chunks to be allocated.

- Chunk - A small range of memory, of various size, within a heap that can be allocated

Each chunk of memory has a header of chunk metadata stored immediately prior to it. These headers form a link list.

Code review

Generic AllocatorGPA pattern warnings

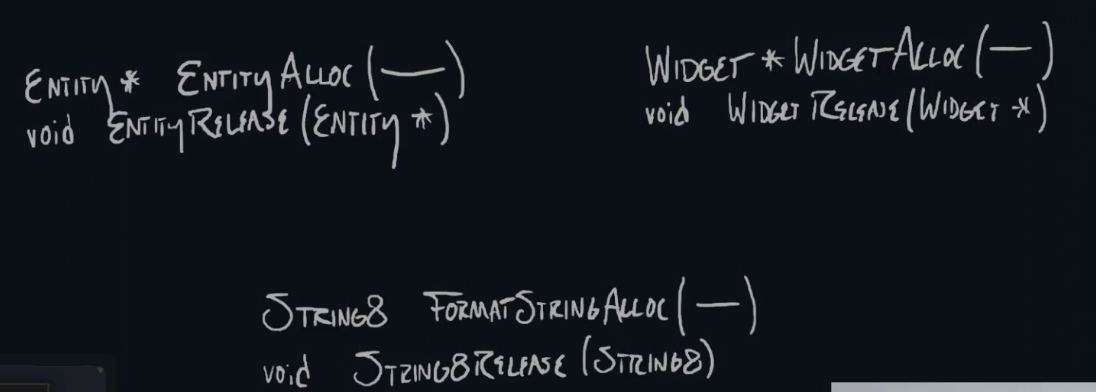

when you follow the previous pattern you end up with code like this, where every single object in your program, that can't be statically allocated, has pairs of functions for alloc and release.

This leads to this pattern being used for very granular “objects”, which explodes the number of actual dynamic allocations and deallocations, potentially having to track 1000's of lifetimes, that may be interdependenat on one aonther. This makes it far more likely that a programming mistake occurs.

the programmer is responable for keeping track of malloc's and free's. Each object has it's OWN LIFETIME that the programmer must manage. Mismanagment will lead to "memory leaks" and "dangling pointers", and potential runtime failure.

other strategies

Other strategies try to group objects with similiar lifetimes together, with the ability to free all there memory with a single instruction. Which makes mgmt less error prone, but also does not bear the overhead incurred by using a garbage collector

A memory leak is when your program continually calls malloc and never free's the allocated memory. This eventually leads to your program eventually crashing, when it uses up it's entire address space.

A dangling pointer, is a pointer that is pointing at an address that has been free's already. So any operations using that pointer lead to some very unexpected results.

stable and fast allocation

We can write programs that do not encounter failure cases, by setting hard limits on the amount of memory we are going to use for each subsystem and grouping objects of similiar lifetime together.

- bump allocator

- free list allocator

- arena allocator