Virtualization

The most fundamental abstraction the OS provides is virtualization.

The OS takes a physical resource, such as a CPU or memory, and makes it appear as if an unlimited number of them are available, so every running program thinks it has its own CPU and its own memory.

It is important to remember that a computer actually has limited resources. Virtualization has been essential in allowing computers to become a part of our daily lives. We should not take for granted how much the design of operating systems has shaped the growth and effectiveness of computers.

What we will cover

- Virtualizing the CPU

- Virtualizing memory

Every program that executes is a series of individual CPU instructions. Every instruction reads from or writes to some form of memory: registers, cache, or RAM. This happens billions of times per second.

These two resources are the main forms of virtualization we are going to discuss.

Virtualization is an Illusion

Every program thinks it has its own memory and its own CPU. It does not; this is only an illusion provided by the OS.

Constraints in providing the illusion

- Efficiency

- Security

Efficiency

The OS provides the illusion in a way that is efficient. It cannot incur too much overhead for its own code and operations. If programs ran 20 times more slowly, the system would be considered unusable. There is some overhead, but it must be minimal. Remember that the OS itself is a running program, so it must be written so as not to consume too much memory or use too many CPU cycles itself.

Security

User programs must be restricted: they should only be allowed to access data structures in their own address spaces. They cannot be allowed to access other programs' data structures or interfere with other programs' operations. If program B holds highly sensitive information, like your bank password, the OS cannot allow program A to access the program B data structure where that data is stored. That would be a major security leak. So the OS must provide isolation.

TIME SHARING aka MULTI PROGRAMMING

How do we take a single CPU and create many virtual CPUs?

We use a technique called TIME SHARING.

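The basic principle is to give each program a little bit of time on the CPU, called a time slice, and then switch to another program. A single cycle may take less than a nanosecond, so even a slice of a few milliseconds covers millions of cycles.

Each cycle can be thought of as a single assembly instruction. (In reality it is not that straightforward.)

You can think of it as changing which program is using the CPU every few milliseconds, fast enough that every program appears to run at once.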

A | B | C | A | C
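
The timeline above can be reproduced with a short simulation. This is a minimal sketch, not how a real OS is written: the `time_share` function, the slice counts, and the round-robin ordering are all assumptions chosen for illustration.

```python
from collections import deque

def time_share(work):
    """Simulate time sharing on one CPU.

    `work` maps a program name to the number of time slices it still needs
    (hypothetical numbers). Programs take turns in round-robin order, which
    is an assumed policy; each turn consumes exactly one slice.
    """
    ready = deque(work.items())      # programs waiting for the CPU
    timeline = []                    # which program held the CPU in each slice
    while ready:
        name, remaining = ready.popleft()
        timeline.append(name)        # the program runs for one slice
        if remaining > 1:            # not finished: go to the back of the line
            ready.append((name, remaining - 1))
    return timeline

print(" | ".join(time_share({"A": 2, "B": 1, "C": 2})))  # A | B | C | A | C
```

Program B finishes after one slice, so it drops out of the rotation while A and C each come back for a second turn.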

The "process"

A process is the fundamental abstraction used by the OS to provide CPU and Memory Virtualization.

A process can be thought of as "code that is in execution" together with the resources that the executing code is using. This is what we mean when we talk about a "running program".

A program that is not running is just a bit pattern stored on disk.

A running program involves the CPU, the registers, and memory. That is what we call a process.

It is like the difference between a book sitting alone on a shelf in the library and a book actively being read by someone. When a book is read, the reader processes the letters into words, and the words into meanings, thoughts, ideas, feelings, imagination, and memory.

The "process" Components - MEMORY

A process is made up of the following memory components

- Private memory called its "address space", which is made up of the following subsections.

- executable object Code of the program

- the heap

- the stack

- an area for static variables, both initialized and uninitialized

"Process" components CPU and other

- CPU register state. The CPU registers change every cycle, e.g.

- $rip, which is the program counter on x86-64. It changes with every instruction.

- The stack pointer, $rsp, which points to the top of the current stack

- IO state - some files and network streams that are open and in use
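
One way to picture these components is as a single record per process. The sketch below is illustrative only: the class names `AddressSpace` and `Process`, the field names, and the use of plain Python containers are assumptions, not the layout of any real kernel structure.

```python
from dataclasses import dataclass, field

@dataclass
class AddressSpace:
    """The private memory of one process, split into its subsections."""
    code: bytes = b""                                  # executable object code
    static_data: dict = field(default_factory=dict)    # static variables
    heap: list = field(default_factory=list)
    stack: list = field(default_factory=list)

@dataclass
class Process:
    """Everything the OS would need to remember about one running program."""
    pid: int
    memory: AddressSpace = field(default_factory=AddressSpace)
    registers: dict = field(default_factory=lambda: {"rip": 0, "rsp": 0})
    open_files: list = field(default_factory=list)     # I/O state

p = Process(pid=1)
p.registers["rip"] += 1            # the program counter moves with each instruction
p.open_files.append("notes.txt")   # hypothetical open file
```

Each `Process` gets its own fresh containers, which mirrors the idea that no two processes share an address space or register state.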

Mechanisms

If we want to build a time-sharing OS, we need to figure out the steps and procedures to follow when we change which process is executing on the CPU. These steps, along with the data structures that store the process information, are called mechanisms.

By steps I mean the exact code the CPU executes to switch its context from one program to another. This code is part of the OS. This is mechanism code. An example is the context-switch routine that swaps one process off the CPU and another on.

Policies

On top of the mechanisms we have algorithms that decide which process should be on the CPU and for how long. These decision-making algorithms are known as policies. They are also executable code that is a part of the OS, with their own data structures that maintain additional process information and statistics.

Policies and mechanisms

Notice that these are separate concepts and implementations. You can take a particular mechanism and plug in different policies to try, or you can try different mechanisms with a single policy.
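
The separation can be sketched by passing the policy into the mechanism as a function. Everything here is hypothetical: `run` stands in for the mechanism, and `always_first` and `least_used` are two made-up policies used only to show that they are interchangeable.

```python
def always_first(ready, used):
    """Policy (assumed): always pick the first ready process."""
    return ready[0]

def least_used(ready, used):
    """Policy (assumed): pick the process that has had the least CPU time."""
    return min(ready, key=lambda name: used[name])

def run(ready, policy, slices):
    """Mechanism: hand out `slices` turns on the CPU, asking `policy` each time."""
    used = {name: 0 for name in ready}   # per-process statistics
    for _ in range(slices):
        chosen = policy(ready, used)     # the policy decides WHO runs
        used[chosen] += 1                # the mechanism puts it on the CPU
    return used

print(run(["A", "B"], always_first, 4))  # {'A': 4, 'B': 0}
print(run(["A", "B"], least_used, 4))    # {'A': 2, 'B': 2}
```

The `run` function never changes; only the function passed as `policy` does, and the outcome for the two processes is very different.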

Limited Direct Execution

Direct Execution

If you want to run a program as fast as possible, that is, at the speed of the CPU, which is billions of instructions per second, you run your program directly on the CPU. This is the fastest and most efficient way to run a program. We call this Direct Execution.

Problem with direct execution

If we let a program have complete control of the CPU, it can do whatever it wants. If the OS lets a program do whatever it wants, the OS is no longer able to manage resources effectively. It can no longer provide security or a time-sharing environment.

Limited Direct Execution

Limited Direct Execution is the name we give to the core low level mechanism concept that an OS uses to Virtualize the CPU and Memory.

We want programs to run as fast as possible, but we still want the OS to maintain control over the system's resources.

To allow a process to run efficiently, the OS allows it to run directly on the CPU.

But to maintain control, the OS gets involved at certain KEY MOMENTS in the process life cycle by using special functionality built into the HARDWARE and the OS.

The OS creates a SANDBOX environment around the running process that allows the process to run directly on the CPU but limits what the process is allowed to do with the CPU.

Timeline: Direct Execution scenario

Let's imagine that when we boot the computer, the OS is the first program that runs. You turn on the computer, some built-in instructions in ROM run, and one of the last of those instructions says JUMP to the first instruction (THE ENTRY POINT) of the program called the OPERATING SYSTEM.

The OS program doesn't do anything except help the user run other programs. So when the user asks the OS to run a program, in a Direct Execution scenario the OS would simply set the $rip register to the first instruction (the entry point) of that program, and the CPU would start executing at that instruction.

At this point the OS would no longer be running.

The user process limitation

The processes a user runs are called USER PROCESSES.

We want our user processes to run fast, so we want them running directly on the CPU.

Once a process is running on the CPU, how do we control it? IT HAS THE CPU, THE OS DOESN'T!

If a user process is running, what is the OS doing? The answer is nothing; it's NOT running! If the OS is not running, how does it make any decisions or control any resources?

User Processes: 3 OS scenarios

- Problem 1. What if the user process wants to do something restricted? How does the OS, which is not running, prevent restricted behavior?

- Problem 2. What if the OS wants to stop one user process and start another? We need a way for the OS to start running again so it can choose which program runs next. How does the OS regain control of the CPU?

- Problem 3. What if the user process is doing some slow operation like disk or network I/O? How would the OS know? It's not running! We do not want to waste CPU cycles.

1. How do we allow restricted CPU operations by USER processes?

Early OS developers realized they needed support from the hardware to help with OS services. As a result, functionality that supports the OS is now built into the hardware itself.

MODE: each CPU provides a BIT that sets the CPU into different modes.

- User Mode

- Kernel Mode (operating system)

In kernel mode the CPU is completely unrestricted.

In user mode the CPU can't do certain things, such as performing disk I/O directly or changing the mode bit.

CPU modes

- How do we get into one of these modes?

- How do we transition from one mode to the other?

At boot time, the system boots into kernel mode. The OS is the first thing that runs, and the CPU is set to kernel mode.

When the OS runs a user program, it transitions the CPU into user mode and sets the next instruction to be some entry point in the user program, the actual address of a CPU instruction.

When the user program wants to do something restricted, like disk I/O, the user process needs to issue a special instruction.

Special User Mode instructions

User processes can't have access to the address space of the kernel because user processes are restricted. So a user process can't just set the instruction pointer to some address in kernel space. It doesn't know where the routines are, and it doesn't have access to that memory. It also can't be given direct access to the CPU mode bit.

Review our abstract understanding of the CPU

In our current understanding of the CPU, it is running a very simple program. That program is:

while(1) { // infinite loop

Fetch instruction from memory //(pointed to by $rip)

decode instruction // (figure out the instruction) (increment $rip)

execute instruction // (change bit pattern in memory or register)

}
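
To make the loop concrete, here is a toy version of it. This is only a sketch: the three-instruction set (`ADD`, `JMP`, `HALT`), the `acc` register, and the `run_cpu` function are invented for illustration, and a `HALT` instruction is added so that the example terminates.

```python
def run_cpu(program):
    """Fetch, decode, and execute instructions until HALT is reached.

    `program` is a list of (opcode, argument) pairs standing in for memory.
    """
    regs = {"rip": 0, "acc": 0}           # rip: program counter, acc: accumulator
    while True:
        instr = program[regs["rip"]]      # fetch (pointed to by rip)
        op, arg = instr                   # decode (figure out the instruction)
        regs["rip"] += 1                  # increment rip
        if op == "ADD":                   # execute (change a register)
            regs["acc"] += arg
        elif op == "JMP":
            regs["rip"] = arg
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode {op!r}")

print(run_cpu([("ADD", 2), ("ADD", 3), ("HALT", 0)]))  # {'rip': 3, 'acc': 5}
```

Notice that nothing in this loop ever hands control to anyone else: whatever program is loaded simply runs, which is exactly the limitation the expanded model addresses.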

expanding our CPU model

The previous model is a simplistic view and only accounts for running a single program on the CPU. When we are running an OS, we need additional support from the CPU, so we need to expand our model of the program the CPU is running. Operating system support is built into the routine: our CPU loop is expanded to support the OS.

Hardware support for kernel and user mode

The CPU provides 2 modes: kernel mode, which is completely unrestricted, and user mode, which is restricted. The CPU has a built-in mechanism for switching from one mode to the other. This must be done carefully so as not to breach the security of the system.

expanded view

We need to add some understanding to our CPU model:

while(1) { // infinite loop

Fetch instruction from memory //(pointed to by $rip)

decode instruction // (figure out the instruction) (increment $rip)

CHECK PERMISSIONS // is inst ok to execute?

IF NOT OK, RAISE A HARDWARE EXCEPTION (OS GETS INVOLVED)

JUMP to an OS DEFINED EXCEPTION HANDLER routine

execute instruction // (change bit pattern in memory or register)

PROCESS INTERRUPTS // after each instruction check for interrupts

}
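
The two additions, the permission check and the interrupt check, can be sketched as a single pass through the loop. The opcode names in `PRIVILEGED`, the dictionary used for CPU state, and the `step` function are assumptions made for this example; the actual switch to kernel mode and jump to a handler are left out and represented by the returned events.

```python
PRIVILEGED = {"SET_TIMER", "DISK_IO"}    # hypothetical restricted opcodes

def step(cpu, program, pending_interrupts):
    """Run one pass of the expanded loop.

    Returns the list of events that would hand control to the OS.
    """
    events = []
    op = program[cpu["rip"]]                          # fetch
    cpu["rip"] += 1                                   # decode, increment rip
    if op in PRIVILEGED and cpu["mode"] == "user":    # check permissions
        events.append(("exception", op))              # OS exception handler would run
    else:
        cpu["executed"].append(op)                    # execute
    while pending_interrupts:                         # process interrupts
        events.append(("interrupt", pending_interrupts.pop(0)))
    return events

cpu = {"rip": 0, "mode": "user", "executed": []}
program = ["ADD", "DISK_IO"]
first = step(cpu, program, ["timer"])    # [('interrupt', 'timer')]
second = step(cpu, program, [])          # [('exception', 'DISK_IO')]
```

In user mode the `DISK_IO` instruction is never executed; it only produces an exception event. The same instruction would execute normally with `cpu["mode"]` set to `"kernel"`.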

Interrupts

Exceptions/traps happen during the execution of your program. They could be:

- Your program trying to do something illegal

- Your program calling for services using a system call

Interrupts occur when devices need attention:

- A hard drive finishes a request

- A key has been pressed

- A mouse moves

- A timer that is set to go off every few milliseconds goes off

Handling Interrupts

One or more interrupts may occur during the fetch, decode, and execute cycle. Those interrupts are handled after the execute phase of each instruction.

Each interrupt has a corresponding operating system routine defined. If the CPU registers an interrupt, it sets itself to kernel mode and jumps to the first instruction of the corresponding interrupt routine.
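
The lookup from interrupt to routine behaves like a table indexed by interrupt number. The sketch below assumes made-up interrupt numbers and handler names (`on_timer`, `on_keyboard`); real hardware defines its own numbering and table format.

```python
def on_timer(cpu):
    """Hypothetical OS routine for the timer interrupt."""
    return "timer tick: OS may pick another process"

def on_keyboard(cpu):
    """Hypothetical OS routine for a key press."""
    return "key press: OS reads the key"

# Assumed interrupt numbers mapped to OS handler routines.
INTERRUPT_TABLE = {0: on_timer, 1: on_keyboard}

def deliver_interrupt(cpu, number):
    """What the hardware does when interrupt `number` is registered."""
    cpu["mode"] = "kernel"               # the CPU sets itself to kernel mode
    handler = INTERRUPT_TABLE[number]    # look up the corresponding routine
    return handler(cpu)                  # jump to its first instruction

cpu = {"mode": "user"}
message = deliver_interrupt(cpu, 0)
```

The user program takes no part in this: the table is filled in by the OS, and the lookup and mode change are done by the CPU.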

Limited Direct Execution

We allow a user program to run unbridled on the CPU at full speed, with some limitations. The only time the OS gets involved is when it is triggered by hardware interrupts, exceptions, and traps.

Timeline review

- At boot time the OS runs first. The CPU starts in privileged/kernel mode

- Using privileged instructions, the OS sets up all exception/trap and interrupt handler routines so the CPU knows where they are in memory. Handlers are just code.

- The OS starts a timer interrupt (using a privileged instruction) so it will regain CPU control at regular time intervals

- Once all that is done, the OS is ready to run a user program

interactions: OS service

- OS starts in kernel mode (sets everything up)

- Set CPU to user mode and run program A

- Program A wants a service, so it makes a system call (trap instruction).

Some system calls may be requests for file I/O, creating a new process using fork/exec, exit, wait, network I/O, etc.

- On a trap instruction, the CPU transitions itself from user mode to kernel mode and jumps into the OS at the predetermined trap handler's address. This all happens in the hardware; the user code does not do any of this.

- The CPU must also save the register state of the running process so we can resume execution later.

Return from trap

When the OS is done handling the trap, we return to the running program using the return-from-trap instruction. In the return from trap, the registers are restored and the CPU sets itself back to user mode. The $rip will point to the next instruction to execute, and the CPU will continue from there.

Even when we start a program for the first time, the OS uses a return-from-trap instruction. The OS sets the $rip to the main entry point and executes return from trap.
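
The trap and return-from-trap pair can be sketched as two small functions acting on a model CPU. The addresses (101, 5000, 9000), the dictionary layout, and the function names are all hypothetical, and the real work happens in hardware rather than in code like this.

```python
def trap(cpu, saved, handler_address):
    """Hardware side of a trap instruction (simplified)."""
    saved.update(cpu["regs"])              # save the register state of the process
    cpu["mode"] = "kernel"                 # switch from user mode to kernel mode
    cpu["regs"]["rip"] = handler_address   # jump to the predetermined trap handler

def return_from_trap(cpu, saved):
    """Restore the saved registers and drop back to user mode."""
    cpu["regs"] = dict(saved)
    cpu["mode"] = "user"

# rip 101 is assumed to be the instruction that follows the system call.
cpu = {"mode": "user", "regs": {"rip": 101, "rsp": 5000}}
saved = {}
trap(cpu, saved, handler_address=9000)     # the process makes a system call
# ... the OS handles the request here, in kernel mode ...
return_from_trap(cpu, saved)               # execution resumes at rip 101
```

Starting a brand-new program fits the same pattern: the OS would fill `saved` with the program's entry point and then call `return_from_trap`, without any earlier `trap`.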

What happens if program A never causes an exception or trap? How does the OS regain control?

- OS starts in kernel mode (sets everything up), including the timer

- Set CPU to user mode and run program A, which is an infinite loop

- The timer interrupt fires on the CPU, and the OS handler runs

- The handler can choose whether to keep running Program A or switch to Program B. Switching is known as a CONTEXT SWITCH

- OS executes return from trap to the program it chooses
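
The handler's part in these steps can be sketched as follows. The function names, the `alternate` policy, and the register values are assumptions for illustration; the point is only that a context switch means saving one process's registers and loading another's.

```python
def timer_handler(cpu_regs, current, processes, choose_next):
    """OS handler for the timer interrupt: possibly perform a context switch."""
    processes[current]["regs"] = dict(cpu_regs)   # save the outgoing context
    chosen = choose_next(current, processes)      # decide who runs next
    cpu_regs.clear()
    cpu_regs.update(processes[chosen]["regs"])    # load the incoming context
    return chosen                                 # return from trap resumes this one

def alternate(current, processes):
    """Assumed policy: pick the next process name in sorted order, wrapping around."""
    names = sorted(processes)
    return names[(names.index(current) + 1) % len(names)]

processes = {"A": {"regs": {"rip": 0}}, "B": {"regs": {"rip": 500}}}
cpu_regs = {"rip": 42}     # Program A was interrupted at instruction 42
running = timer_handler(cpu_regs, "A", processes, alternate)
```

After the call, `running` is `"B"`, the CPU registers hold B's saved state, and A's interrupted position (42) is stored so that A can be resumed later.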

Process List

The OS must keep track of all processes running on the system. Each process has a set of information associated with it in some kind of data structure, and the OS keeps a linked list, tree, or array of all processes on the system.

Process State

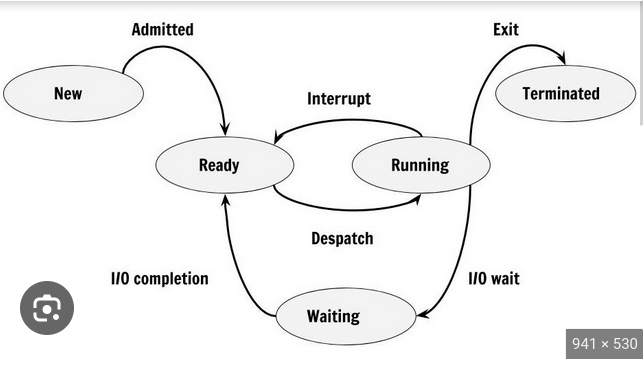

One aspect of a process to keep track of is the process state. A process can be in one of several states.

- READY: Many processes can be in this state at a given time. They are waiting to be run.

- RUNNING: Only one process can be running on a given CPU at a time.

- BLOCKED: Some operations, like I/O, are very slow compared to processor speed. If a process is waiting for a slow operation, it is placed in the BLOCKED state.

When the timer interrupt occurs, the OS can go through the process list, see which processes are READY, and decide which one to put into the RUNNING state.
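
The three states and the moves between them can be written down as a small state machine. This sketch assumes the usual three-state model; the event names (`scheduled`, `descheduled`, `io_started`, `io_done`) are descriptive labels invented here, not OS terminology from these notes.

```python
from enum import Enum

class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"

# Allowed moves between states; the event names are labels chosen for this sketch.
TRANSITIONS = {
    (State.READY, "scheduled"): State.RUNNING,
    (State.RUNNING, "descheduled"): State.READY,
    (State.RUNNING, "io_started"): State.BLOCKED,
    (State.BLOCKED, "io_done"): State.READY,
}

def next_state(state, event):
    """Return the new state, or raise ValueError if the move is not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"{event} is not valid in state {state.name}") from None

state = next_state(State.READY, "scheduled")     # State.RUNNING
state = next_state(state, "io_started")          # State.BLOCKED
state = next_state(state, "io_done")             # State.READY
```

A BLOCKED process does not go straight back to RUNNING in this model: when its I/O finishes it becomes READY and waits to be chosen again.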

Blocked Processes timeline

Imagine process A is running on the CPU and issues a trap to read from disk. A CPU cycle takes about 0.3 nanoseconds and a disk read takes about 10 ms, so if the OS did not move process A into the BLOCKED state, the CPU could waste tens of millions of cycles waiting. CPU utilization must be optimized, so we keep process A out of the queue of READY processes until the I/O is done.
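
A quick calculation with the two figures quoted above shows the scale of the waste. The constant names are made up for this example; the numbers are the ones from the paragraph.

```python
CYCLE_TIME_S = 0.3e-9      # one CPU cycle: 0.3 nanoseconds
DISK_READ_S = 10e-3        # one disk read: 10 milliseconds

cycles_per_read = DISK_READ_S / CYCLE_TIME_S
print(f"{cycles_per_read:,.0f} cycles pass during one disk read")
```

That is roughly 33 million cycles per disk read, all of which another READY process could use while process A is BLOCKED.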

OS scheduling policy

We now have the mechanism we can use to switch processes. Now we need to make decisions about which process to run and when.

CPU Scheduler

What we have been discussing is the mechanism portion of the LIMITED DIRECT EXECUTION protocol. Now we will move on to the policies a process scheduler may use to decide which process to run next. The scheduler is the part of the OS that implements policies regarding when to switch processes on and off the CPU.